The OpenStack Nova project has the ability to inject files into a guest filesystem immediately prior to booting the virtual machine instance. Historically the way it did this was to set up either a loop or NBD device on the host OS and then mount the guest filesystem directly on the host OS. One of the high priority tasks for Red Hat engineers when we became involved in OpenStack was to integrate libguestfs FUSE into Nova to replace the use of loopback + NBD devices, and then subsequently refactor Nova to introduce a VFS layer which enables use of the native libguestfs API to avoid any interaction with the host filesystem at all.

There has already been a vulnerability in the Nova code which allowed a malicious user to inject files to arbitrary locations in the host filesystem. This has of course been fixed, but even so mounting guest disk images on the host OS should still be considered very bad practice. The libguestfs manual describes the remaining risk quite well:

When you mount a filesystem, mistakes in the kernel filesystem (VFS) can be escalated into exploits by attackers creating a malicious filesystem. These exploits are very severe for two reasons. Firstly there are very many filesystem drivers in the kernel, and many of them are infrequently used and not much developer attention has been paid to the code. Linux userspace helps potential crackers by detecting the filesystem type and automatically choosing the right VFS driver, even if that filesystem type is unexpected. Secondly, a kernel-level exploit is like a local root exploit (worse in some ways), giving immediate and total access to the system right down to the hardware level

Libguestfs provides protection against this risk by creating a virtual machine inside which all guest filesystem manipulations are performed. Thus even if the guest kernel gets compromised by a VFS flaw, the attacker still has to break out of the KVM virtual machine and its sVirt confinement to stand a chance of compromising the host OS. Some people have doubted the severity of this kernel VFS driver risk in the past, but an article posted on LWN today should serve to reinforce the fact that libguestfs is right to be paranoid. The article highlights two kernel filesystem vulnerabilities (one in ext4, which is enabled in pretty much all Linux hosts) which left hosts vulnerable for as long as 3 years in some cases:

- CVE-2009-4307: a divide-by-zero crash in the ext4 filesystem code. Causing this oops requires convincing the user to mount a specially-crafted ext4 filesystem image.

- CVE-2009-4020: a buffer overflow in the HFS+ filesystem exploitable, once again, by convincing a user to mount a specially-crafted filesystem image on the target system.

If the user has access to an OpenStack deployment which is not using libguestfs for file injection, then “convincing a user to mount a specially crafted filesystem” merely requires them to upload their evil filesystem image to glance and then request Nova to boot it.

Anyone deploying OpenStack with file injection enabled is strongly advised to make sure libguestfs is installed, to avoid any direct exposure of the host OS kernel to untrusted guest images.

While I picked on OpenStack as a convenient way to illustrate the problem here, it is not unique to OpenStack. Far too frequently I find documentation relating to virtualization that suggests people mount untrusted disk images directly on their OS. Based on their documented features I’m confident that a number of public virtual machine hosting companies will be mounting untrusted user disk images on their virtualization hosts, likely without using libguestfs for protection.

When launching a virtual machine, Nova has the ability to inject various files into the disk image immediately prior to boot up. This is used to perform the following setup operations:

- Add an authorized SSH key for the root account

- Configure init to reset SELinux labelling on /root/.ssh

- Set the login password for the root account

- Copy data into a number of user specified files

- Create the meta.js file

- Configure network interfaces in the guest

This file injection is handled by the code in the nova.virt.disk.api module. The code which does the actual injection is designed around the assumption that the filesystem in the guest image can be mapped into a location in the host filesystem. There are a number of ways this can be done, so Nova has a pluggable API for mounting guest images in the host, defined by the nova.virt.disk.mount module, with the following implementations:

- Loop – Use losetup to create a loop device. Then use kpartx to map the partitions within the device, and finally mount the designated partition. Alternatively on new enough kernels the loop device’s builtin partition support is used instead of kpartx.

- NBD – Use qemu-nbd to run an NBD server and attach with the kernel NBD client to expose a device. Partitions are then mapped in the same way as in the Loop module.

- GuestFS – Use libguestfs to inspect the image and set up a FUSE mount for all partitions or logical volumes inside the image.
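The Loop and NBD approaches described above can be sketched as the sequence of host commands each one implies. This is only an illustration, not Nova's code: the function names, the fixed device names (`/dev/loop0`, `/dev/nbd0`) and the use of kpartx for both cases are assumptions made for the example, and nothing is actually executed.

```python
def loop_commands(image, partition, mount_dir, loop_dev="/dev/loop0"):
    """Return the argv lists a Loop-style mount would run, in order.

    The device name is a hypothetical default; a real implementation
    would ask losetup for a free device.
    """
    # kpartx exposes partitions as /dev/mapper/<device>p<number>
    mapped = "/dev/mapper/%sp%d" % (loop_dev.split("/")[-1], partition)
    return [
        ["losetup", loop_dev, image],   # attach the raw image to a loop device
        ["kpartx", "-a", loop_dev],     # map the partitions inside it
        ["mount", mapped, mount_dir],   # mount the designated partition
    ]


def nbd_commands(image, partition, mount_dir, nbd_dev="/dev/nbd0"):
    """Return the argv lists an NBD-style mount would run, in order."""
    mapped = "/dev/mapper/%sp%d" % (nbd_dev.split("/")[-1], partition)
    return [
        ["qemu-nbd", "-c", nbd_dev, image],  # serve the image and connect the kernel client
        ["kpartx", "-a", nbd_dev],           # partition mapping as per the Loop case
        ["mount", mapped, mount_dir],
    ]


if __name__ == "__main__":
    for argv in nbd_commands("disk.qcow2", 1, "/mnt/guest"):
        print(" ".join(argv))
```

Every one of these commands needs root on the host, and the final `mount` is the step that hands an untrusted filesystem to the host kernel.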

The Loop module can only handle Raw format files, while the NBD module can handle any format that QEMU supports. While they have the ability to access partitions, the code handling this is very dumb. It requires the Nova global ‘libvirt_inject_partition’ config parameter to specify which partition number to inject. The result is that every image you upload to glance must be partitioned in exactly the same way. Much better would be if it used a metadata parameter associated with the image. The GuestFS module is much more advanced and inspects the guest OS to figure out arbitrarily partitioned images and even LVM based images.

Nova has an “img_handlers” configuration parameter which defines the order in which the three mount modules above are to be tried. It tries to mount the image with each one in turn, until one succeeds. This is quite crude code really – it has already been hacked to avoid trying the Loop module if Nova knows it is using QCow2. It has to be changed by the Nova admin if they’re using LXC, otherwise you can end up using KVM with LXC guests which is probably not what you want. The try-and-fallback paradigm also has the undesirable behaviour of masking errors that you would really rather consider fatal to the boot process.
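A minimal sketch of the try-and-fallback pattern shows how the masking happens. The names here (`MountError`, `mount_with_fallback`, and the two stand-in handlers) are hypothetical and chosen for illustration; this is not the Nova implementation.

```python
class MountError(Exception):
    """Raised by a handler that cannot mount the image."""


def mount_with_fallback(image, handlers):
    """Try each (name, mount_fn) pair in order.

    Returns the name of the first handler that succeeds, together with
    the errors from the handlers that were tried before it.
    """
    errors = []
    for name, mount_fn in handlers:
        try:
            mount_fn(image)
            return name, errors
        except MountError as exc:
            # The failure is recorded here, but a caller that only looks
            # at the winning handler never sees it.
            errors.append((name, str(exc)))
    raise MountError("no handler could mount %s: %s" % (image, errors))


def failing_guestfs(image):
    # Stand-in for a handler that hits a real problem
    raise MountError("appliance failed to start")


def working_loop(image):
    # Stand-in for a handler that succeeds
    return None


if __name__ == "__main__":
    name, swallowed = mount_with_fallback(
        "disk.img", [("guestfs", failing_guestfs), ("loop", working_loop)])
    print(name, swallowed)
```

In this run the safer handler fails, the image is quietly mounted via the loop device instead, and the original error is only visible if the caller bothers to inspect the list of swallowed failures.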

As mentioned earlier, the file injection code uses the mount modules to map the guest image filesystem into a temporary directory in the host (such as /tmp/openstack-XXXXXX). It then runs various commands like chmod, chown, mkdir, tee, etc to manipulate files in the guest image. Of course Nova runs as an unprivileged user, and the guest files to be changed are typically owned by root. This means all the file injection commands need to run via Nova’s rootwrap utility to gain root privileges. Needless to say, this has the undesirable consequence that the code injecting files into a guest image in fact has privileges that allow it to write to arbitrary areas of the host filesystem. One mistake in handling symlinks and you have the potential for a carefully crafted guest image to result in compromise of the host OS. It should come as little surprise that this has already resulted in a security vulnerability / CVE against Nova.
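To make the symlink problem concrete, here is a small sketch of the kind of check that code writing into a host-mounted guest image has to get right. The helper name `join_inside` is hypothetical, and the check is deliberately simplistic: it still races against anything that can modify the mounted tree between the check and the write, which is part of why avoiding the host mount entirely is the better fix.

```python
import os


def join_inside(root, guest_path):
    """Resolve guest_path below root, refusing anything that escapes it.

    A guest image can contain a symlink such as etc -> /etc. When the
    image is mounted on the host, that link resolves against the HOST
    root, so a write to <root>/etc/passwd would land in the host's /etc.
    """
    root = os.path.realpath(root)
    candidate = os.path.realpath(os.path.join(root, guest_path.lstrip("/")))
    if candidate != root and not candidate.startswith(root + os.sep):
        raise ValueError("%s escapes the guest root %s" % (guest_path, root))
    return candidate
```

With a mounted image containing the symlink `etc -> /etc`, a call such as `join_inside(mount_dir, "/etc/passwd")` raises `ValueError` instead of returning a path on the host.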

The solution to this class of security problems is to decouple the file injection code from the host filesystem. This can be done by introducing a “VFS” (Virtual File System) interface which defines a formal API for the various logical operations that need to be performed on a guest filesystem. With that it is possible to provide an implementation that uses the libguestfs native Python API, rather than FUSE mounts. As well as being inherently more secure, avoiding the FUSE layer will improve performance, and allow Nova to utilize libguestfs APIs that don’t map into FUSE, such as its Augeas support for parsing config files. Nova still needs to work in scenarios where libguestfs is not available though, so a second implementation of the VFS APIs will be required based on the existing Loop/NBD device mount approach. The security of the non-libguestfs support has not changed with this refactoring work, but decoupling the file injection code from the host filesystem does make it easier to write unit tests for this code. The file injection code can be tested by mocking out the VFS layer, while the VFS implementations can be tested by mocking out the libguestfs or command execution APIs.
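A rough sketch of what such an interface could look like follows. The class and method names are illustrative assumptions rather than Nova's actual API, and the only implementation shown is an in-memory fake of the kind that makes the injection logic unit-testable without touching any real filesystem.

```python
import abc
import posixpath


class VFS(abc.ABC):
    """Logical operations the injection code needs on a guest filesystem."""

    @abc.abstractmethod
    def make_path(self, path):
        """Create a directory and any missing parents."""

    @abc.abstractmethod
    def append_file(self, path, content):
        """Append text to a file, creating it if needed."""

    @abc.abstractmethod
    def set_permissions(self, path, mode):
        """Set the permission bits on a file or directory."""


class FakeVFS(VFS):
    """In-memory stand-in used for testing; never touches the host."""

    def __init__(self):
        self.dirs = {"/"}
        self.files = {}
        self.modes = {}

    def make_path(self, path):
        # Record the directory and each of its parents
        while path not in ("/", ""):
            self.dirs.add(path)
            path = posixpath.dirname(path)

    def append_file(self, path, content):
        self.files[path] = self.files.get(path, "") + content

    def set_permissions(self, path, mode):
        self.modes[path] = mode


def inject_ssh_key(fs, key):
    """Add an authorized SSH key for root using only VFS operations."""
    sshdir = "/root/.ssh"
    keyfile = posixpath.join(sshdir, "authorized_keys")
    fs.make_path(sshdir)
    fs.set_permissions(sshdir, 0o700)
    fs.append_file(keyfile, key.rstrip("\n") + "\n")
    fs.set_permissions(keyfile, 0o600)
```

Because `inject_ssh_key` only ever sees guest-relative paths and the `VFS` methods, a libguestfs-backed implementation and a host-mount-backed implementation could be swapped in behind it without the injection logic changing.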

Incidentally, if you’re wondering why libguestfs does all its work inside a KVM appliance, its man page describes the security issues this approach protects against, compared with directly mounting guest images on the host.

As many readers are no doubt aware, the FOSDEM 2012 conference is taking place this weekend in Brussels. This year I was organized enough to submit a proposal for a talk and was very happy to be accepted. My talk is titled “Building app sandboxes on top of LXC and KVM with libvirt” and is part of the Virtualization & Cloud Dev Room. As you can guess from the title, I will be talking in some detail about the libvirt-sandbox project I recently announced. Richard Jones is also attending to provide a talk on libguestfs and how it is used in cloud projects like OpenStack. There will be three talks covering different aspects of the oVirt project: a general project overview, a technical look at the management engine and a technical look at the node agent VDSM. Finally the GNOME Boxes project I mentioned a few weeks ago will also be represented in the CrossDesktop devroom.

Besides these virtualization related speakers, there are a great many other Red Hat people attending FOSDEM this year, so we put together a small flyer highlighting all their talks. In keeping with the spirit of FOSDEM, these talks will of course be community / technically focused, not corporate marketing ware :-) I look forward to meeting many people at FOSDEM this year, and if all goes well, make it a regular conference to attend.

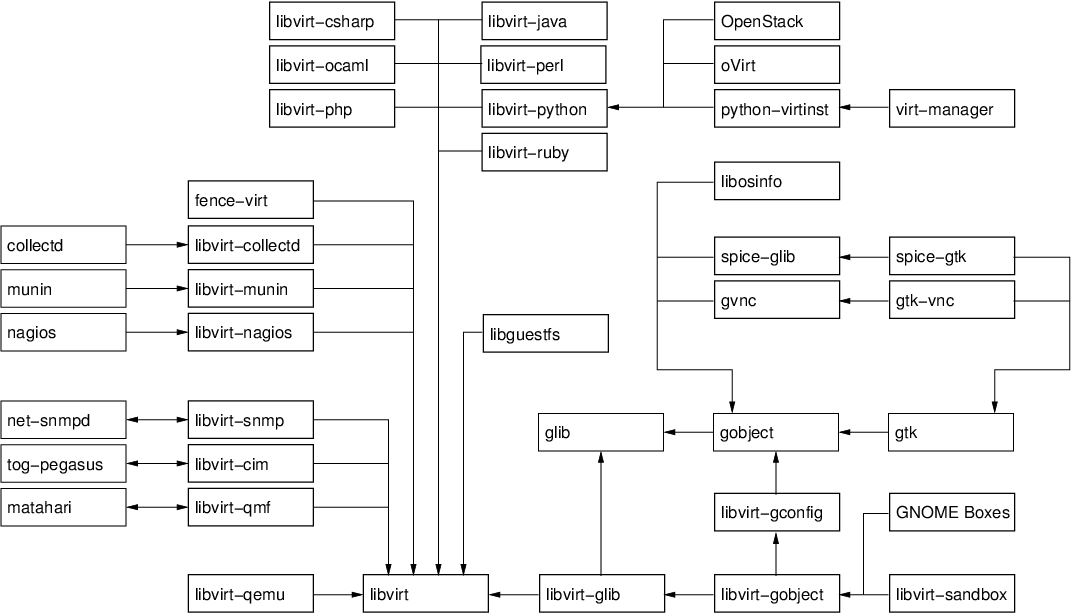

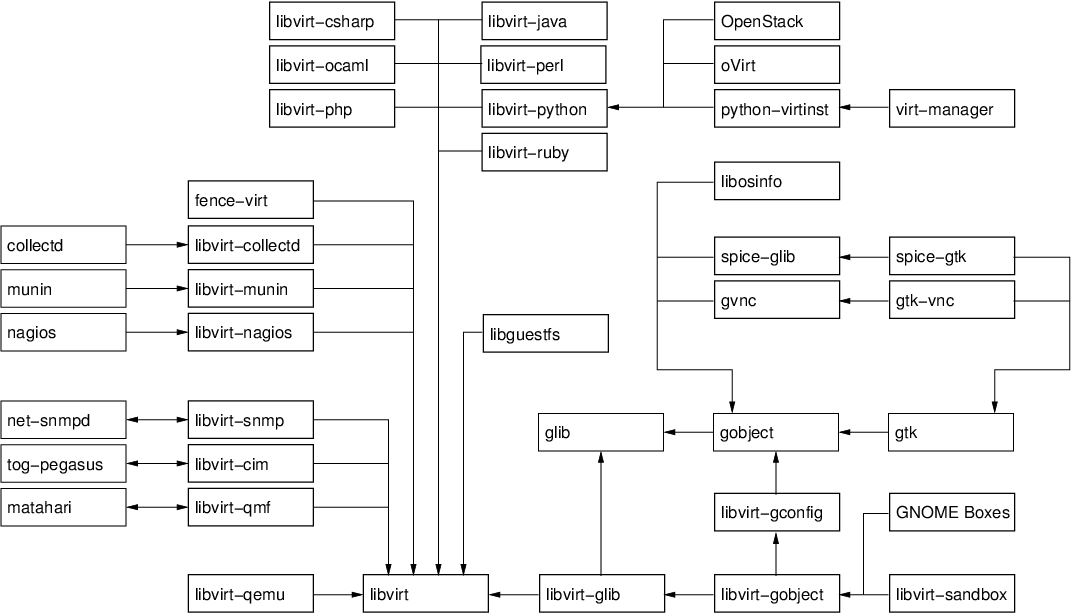

In the five years since the libvirt project started, a lot has changed. The size of the libvirt API has increased dramatically; the number of languages you can access the API from has likewise grown to cover most important targets; libvirt has been translated to fit into several other object models; plugins have been developed to bind libvirt to other tools. At the same time many other libraries have grown up alongside libvirt, not least libguestfs, gtk-vnc and more recently spice-gtk. Together all these pieces provide a rich software development platform for people building virtualization management applications. A picture is worth 1000 words, so to keep this blog post short, here is the way I visualize the pieces in the virtualization tools platform, and a selection of the applications built on it.

The base layer

- libvirt: the core hypervisor agnostic management API, covering virtual machines, host devices, networking, storage, security and more

- libvirt-qemu: a small set of QEMU specific APIs, such as the ability to talk to the QEMU monitor, or attach to externally launched QEMU guests. This library builds on top of libvirt.

- libguestfs: the library for manipulating and accessing the contents of guest filesystem images. This uses libvirt for some actions internally. libguestfs has its own huge set of language bindings which are not shown in the diagram, for the sake of clarity. It will also soon be gaining a mapping into the GObject type system, which will help it play nicely with other GObject based APIs here.

Language bindings

The language bindings for libvirt aim to be a 1-for-1 export of the libvirt C API into the corresponding language. They generally don’t attempt to change the way the libvirt API looks or is structured. There is generally complete interoperability between all language bindings, so you can trivially have part of your application written in Perl and another part written in Java, and have them play nicely together.

- libvirt-ocaml: a binding into the OCaml functional language

- libvirt-php: a binding into the PHP scripting language

- libvirt-perl: a binding into the Perl scripting language

- libvirt-python: a binding into the Python scripting language, which comes as a standard part of the libvirt package

- libvirt-java: a binding into the Java object language

- libvirt-ruby: a binding into the Ruby scripting language

- libvirt-csharp: a binding into the C# object language

Object mappings

The object mappings are distinct from language bindings, because they will often significantly change the structure of the libvirt API to fit the requirements of the object system being targeted. Depending on the object systems involved, this translation might be lossy, thus an application generally has to pick one object system & stick with it. It is not a good idea to do a mixture of SNMP and QMF calls from the same application.

- libvirt-snmp: an agent for SNMP that translates from an SNMP MIB to libvirt API calls.

- libvirt-cim: an agent for CIM that translates from the DMTF virtualization schema to the libvirt API

- libvirt-qmf: an agent for Matahari that translates from a QMF schema to the libvirt API

Infrastructure plugins

Many common infrastructure applications can be extended by adding plugins for new functionality. This is particularly common with network monitoring or performance collection applications. libvirt can of course be used to create plugins for such applications.

- libvirt-collectd: a plugin for collectd that reports statistics on virtual machines

- libvirt-munin: a plugin for munin that reports statistics on virtual machines

- libvirt-nagios: a plugin for Nagios that reports where virtual machines are running

- fence-virt: a plugin for clustering software to allow virtual machines to be “fenced”

GObject layer

The development of a set of GObject based libraries came about after noticing that many users of the basic libvirt API were having to solve similar problems over & over. For example, every application wanted some programmatic way to extract info from XML documents. Many applications wanted libvirt translated into GObjects. Many applications needed a way to determine optimal hardware configuration for operating systems. The original reason for choosing GObject as the basis for these APIs was to facilitate development of graphical desktop applications. With the advent of GObject Introspection, an even more compelling reason is that you get language bindings to all GObject libraries for free. Contrary to popular understanding, GObject is not solely for GTK based desktop applications. It is entirely independent of GTK and can easily be used from any kind of application. If libvirt were to be started from scratch again today, it would probably go straight for GObject as the basis for the primary C library. It is that compelling.

- libosinfo: an API for managing metadata related to operating systems. It includes a database of operating systems with details such as common download URLs, magic byte sequences to identify ISO images, lists of supported hardware. In addition there is a database of hypervisors and their supported hardware. The API allows applications to determine the optimal virtual hardware configuration for deployment of an operating system on a particular hypervisor.

- gvnc: an API providing a client for the RFB protocol, used for VNC servers. The API facilitates the creation of new VNC client applications.

- spice-glib: an API providing a client for the SPICE protocol, used for SPICE servers. The API facilitates the creation of new SPICE client applications.

- libvirt-glib: an API binding the libvirt event loop into the GLib main loop, and translating libvirt errors into GLib errors.

- libvirt-gconfig: an API for generating and manipulating libvirt XML documents. It removes the need for application programmers to directly deal with raw XML themselves.

- libvirt-gobject: an API which translates the libvirt object model into GObjects, also integrating them with the libvirt-gconfig APIs.

- libvirt-sandbox: an API for building application sandboxes using virtualization technology.
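To illustrate why applications benefit from generating libvirt XML through an object API rather than handling raw XML text, here is a small sketch. It uses Python's standard `xml.etree.ElementTree` purely as a stand-in, since it is not libvirt-gconfig and does not mirror its API; the function name and the choice of elements are assumptions for the example, and the output is only a fragment of a domain description, not a complete one.

```python
import xml.etree.ElementTree as ET


def domain_xml(name, memory_kib, disk_path):
    """Build a fragment of a libvirt domain document as a tree of elements."""
    dom = ET.Element("domain", type="kvm")
    ET.SubElement(dom, "name").text = name
    ET.SubElement(dom, "memory", unit="KiB").text = str(memory_kib)
    devices = ET.SubElement(dom, "devices")
    disk = ET.SubElement(devices, "disk", type="file", device="disk")
    ET.SubElement(disk, "source", file=disk_path)
    ET.SubElement(disk, "target", dev="vda", bus="virtio")
    # Serialization takes care of escaping special characters
    return ET.tostring(dom, encoding="unicode")


if __name__ == "__main__":
    print(domain_xml("web & <db>", 1048576, "/var/lib/images/a.img"))
```

A guest name containing `&` or `<` comes out correctly escaped here, whereas naive string formatting would produce a malformed document. That class of bug is what a dedicated configuration API removes from every application at once.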

GTK layer

- gtk-vnc: an API building on gvnc providing a GTK widget which acts as a VNC client. This is used in both virt-manager & virt-viewer

- spice-gtk: an API building on spice-glib providing a GTK widget which acts as a SPICE client. This is used in both virt-manager & virt-viewer

Applications

- python-virtinst: provides the original python virt-install command line tool, as well as a python API which is leveraged by virt-manager. The python-virtinst internal API was the motivation behind the libosinfo library and libvirt-gconfig library

- virt-manager: provides a general purpose desktop application for interacting with libvirt managed virtualization hosts. The virt-manager internal API was the motivation behind the libvirt-gobject library

- oVirt: the umbrella project for building an open source virtualized data center management application. Its VDSM component uses the libvirt python language bindings for managing KVM hosts

- OpenStack: the umbrella project for building an open source cloud management application. Its Nova component uses the libvirt python language bindings for managing KVM, Xen and LXC hosts.

- GNOME Boxes: the new GNOME desktop application for running virtual machines and accessing remote desktops. It uses libvirt-gobject, libosinfo, gtk-vnc & spice-gtk via automatically generated Vala bindings.

The Future

- Get oVirt, OpenStack, python-virtinst and virt-manager using the libosinfo library to centralize definitions of what hardware config to use for deploying operating systems

- Get oVirt & OpenStack using the libvirt-gconfig library to generate configuration, instead of building XML documents up through string concatenation

- Convert python-virtinst & virt-manager to use the libvirt-gconfig, libvirt-gobject libraries instead of their private internal equivalents

- Create a remote-viewer library which pulls in both gtk-vnc and spice-gtk in a higher level framework. This is essentially pulling the commonality out of the way virt-viewer, virt-manager and GNOME Boxes use gtk-vnc and spice-gtk.

- Create a libvirt-install library which provides APIs for provisioning operating systems. This would be pulling out commonality between the way python-virtinst, GNOME Boxes and other applications deploy new operating systems. This would be a bridge layer between libosinfo and libvirt-gobject.

There is undoubtedly plenty of stuff I left out of this diagram & description. For example there are many other data center & cloud management projects that are based on libvirt, which I left out for clarity. There are plenty more libvirt plugins for other applications too, many of which I will never have heard about. No doubt our future plans will change too, as we adapt to new information. This should have given a good overview of how broad the open source virtualization tools software development ecosystem has become.