Provisioning KVM virtual machines on iSCSI with QNAP + virt-manager (Part 2 of 2)

Part I of this posting walked through the steps to create an iSCSI target and LUN on a QNAP server. Part II now covers provisioning guests on that iSCSI storage from virt-manager.

Storage pool management

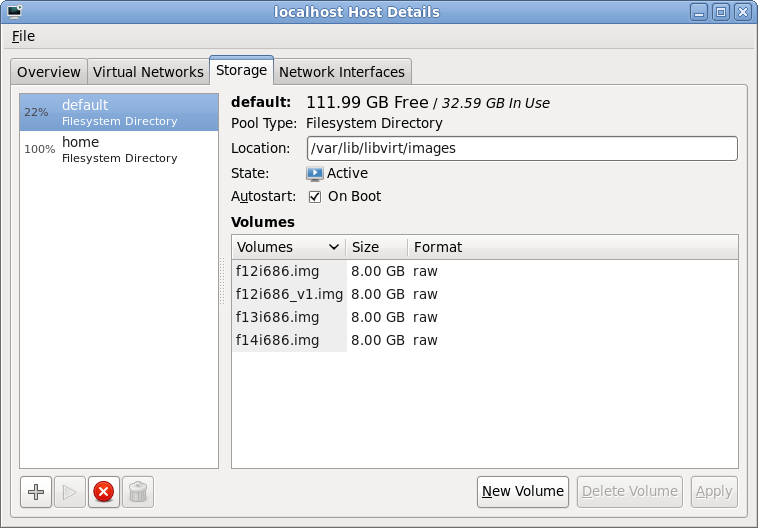

After launching virt-manager and connecting to the desired hypervisor (QEMU/KVM in my case), the first step is to open the storage management pane. From the main virt-manager window, select the menu “Edit -> Host Details” and then navigate to the “Storage” tab. The host shown here already has two storage pools configured, both pointing at local filesystem directories. The “default” storage pool will usually be visible on all libvirt hosts managed by virt-manager and lives in /var/lib/libvirt/images. This isn’t much use if you plan to migrate guests between machines, because migration requires some form of storage shared between the hosts. This is where iSCSI comes into the equation. To add an iSCSI storage pool, click the “+” button in the bottom-left of the window.

Adding a storage pool

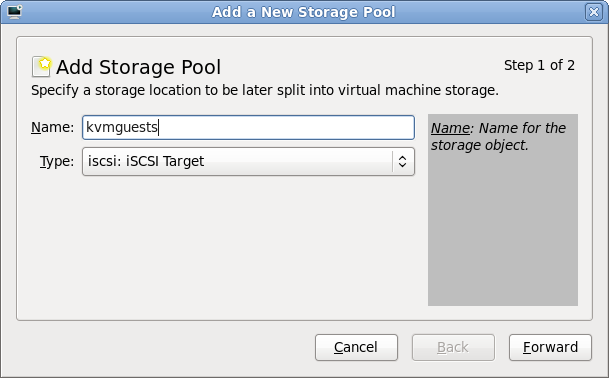

The first stage of setting up a storage pool is to provide a short name and select the type of storage to be accessed. For the sake of consistency, this example gives the storage pool the same name as the iSCSI target previously configured on the QNAP.

Entering iSCSI parameters

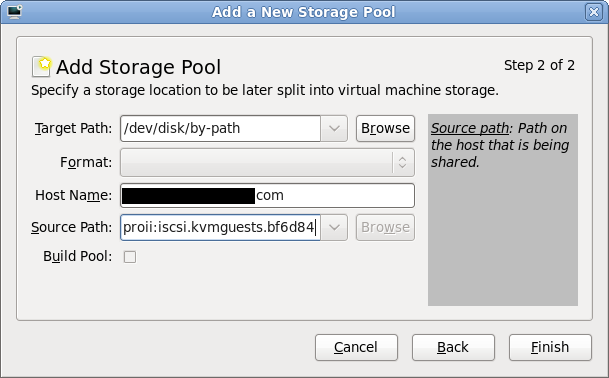

The “Target path” determines how libvirt will expose device paths for the pool. Paths like /dev/sda, /dev/sdb, etc. are not a good choice because they are stable neither across reboots nor across machines in a cluster: the kernel assigns these names on a first come, first served basis. Thus virt-manager helpfully suggests using paths under “/dev/disk/by-path”, which gives a naming scheme that is stable across all machines, because each name is built from the server address, target IQN and LUN number rather than from discovery order. The “Host Name” is simply the fully qualified DNS name of the iSCSI server. Finally, the “Source Path” is that adorable IQN seen earlier when creating the iSCSI target (“iqn.2004-04.com.qnap:ts-439proii:iscsi.kvmguests.bf6d84”).
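Behind the scenes, these wizard fields map onto a libvirt storage pool definition written in XML. The Python sketch below builds such a definition with the standard library's ElementTree, purely as an illustration. The pool name `kvmguests` is assumed from the IQN, `nas.example.com` is a hypothetical stand-in for your QNAP's DNS name, and `iscsi_pool_xml` is an invented helper name. The resulting XML could be saved to a file and loaded with `virsh pool-define` followed by `virsh pool-start`, as an alternative to clicking through the GUI.

```python
import xml.etree.ElementTree as ET


def iscsi_pool_xml(name: str, host: str, iqn: str,
                   target_path: str = "/dev/disk/by-path") -> str:
    """Build a libvirt <pool type='iscsi'> definition from the wizard fields."""
    pool = ET.Element("pool", type="iscsi")
    ET.SubElement(pool, "name").text = name
    source = ET.SubElement(pool, "source")
    # "Host Name" field: the iSCSI server's DNS name
    ET.SubElement(source, "host", name=host)
    # "Source Path" field: the IQN of the iSCSI target
    ET.SubElement(source, "device", path=iqn)
    target = ET.SubElement(pool, "target")
    # "Target path" field: directory where libvirt looks for the LUN device nodes
    ET.SubElement(target, "path").text = target_path
    return ET.tostring(pool, encoding="unicode")


if __name__ == "__main__":
    print(iscsi_pool_xml(
        "kvmguests",
        "nas.example.com",  # hypothetical hostname
        "iqn.2004-04.com.qnap:ts-439proii:iscsi.kvmguests.bf6d84",
    ))
```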

Browsing iSCSI LUNs

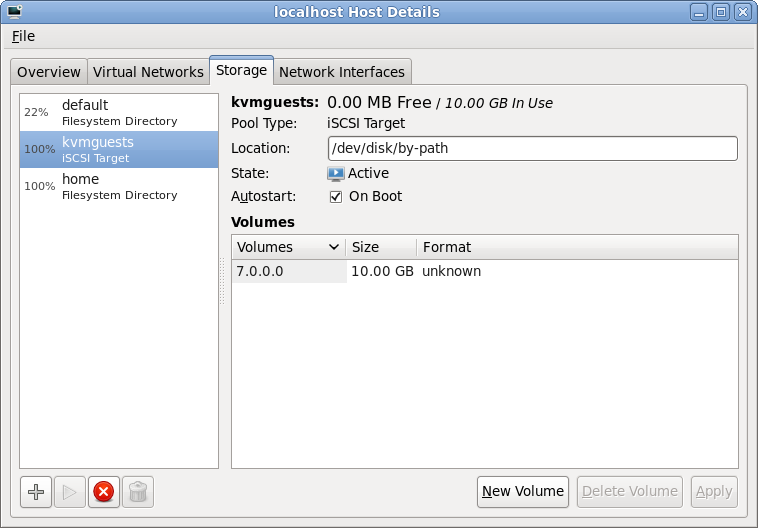

If all went to plan, libvirt connected to the iSCSI server and imported the LUNs associated with the iSCSI target specified in the wizard. Selecting the new storage pool should now show the LUNs and their sizes. These steps can be repeated on other hosts if the intention is to migrate guests between machines. Obviously care should be taken to not run the same VM on two machines at once though. Ideally use clustering software to protect against this scenario.

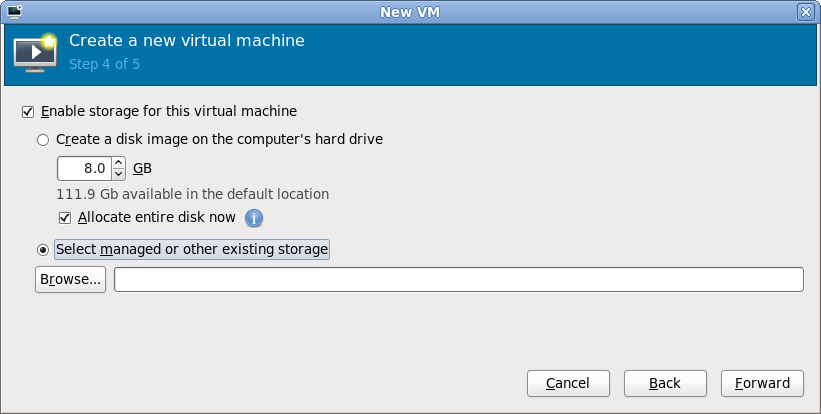

Selecting storage for a new VM

With the iSCSI storage pool configured in virt-manager, it is now possible to create a guest. After breezing through the first couple of steps in the “New VM wizard”, it is time to specify what storage to use for the new guest. By default virt-manager will allocate storage from the local filesystem. This isn’t what we want now, so choose the “Select managed or other existing storage” option and hit the “Browse” button.

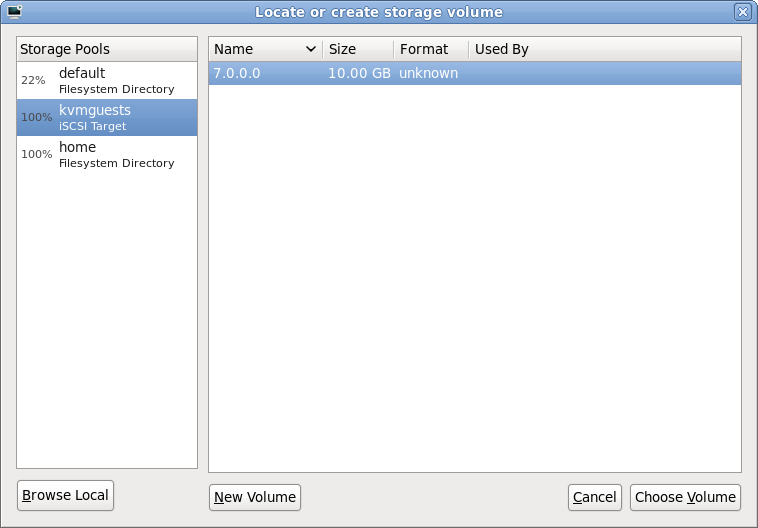

Browsing iSCSI LUNs for the new VM

The dialog that appears shows all the storage pools this libvirt connection knows about. These should match the pools seen a short while ago when configuring the iSCSI storage pool. It should be fairly obvious what to do at this point: select the iSCSI storage pool and the desired LUN (volume) within it, and press “Choose Volume”.
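When a volume is chosen this way, the guest gets a block-device disk rather than a file-backed image. The sketch below builds a rough equivalent of the `<disk>` element that ends up in the guest's domain XML. The exact attributes virt-manager writes can differ between versions; the `vda`/`virtio` target, the 192.0.2.10 portal address in the example path and the `block_disk_xml` name are all assumptions for illustration.

```python
import xml.etree.ElementTree as ET


def block_disk_xml(dev_path: str, target_dev: str = "vda",
                   bus: str = "virtio") -> str:
    """Build a libvirt <disk> element attaching a raw block device such as an iSCSI LUN."""
    disk = ET.Element("disk", type="block", device="disk")
    # The LUN is handed to the guest as-is, so it is a raw disk, not qcow2
    ET.SubElement(disk, "driver", name="qemu", type="raw")
    # Host-side device node, e.g. a /dev/disk/by-path entry from the iSCSI pool
    ET.SubElement(disk, "source", dev=dev_path)
    # Device name and bus as presented inside the guest
    ET.SubElement(disk, "target", dev=target_dev, bus=bus)
    return ET.tostring(disk, encoding="unicode")


if __name__ == "__main__":
    print(block_disk_xml(
        "/dev/disk/by-path/ip-192.0.2.10:3260-iscsi-"
        "iqn.2004-04.com.qnap:ts-439proii:iscsi.kvmguests.bf6d84-lun-0"
    ))
```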

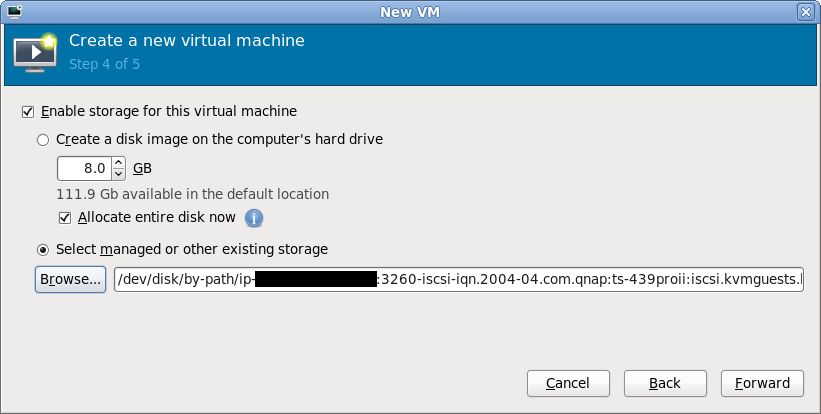

Continuing the new VM wizard

After choosing an iSCSI LUN, its path should now be displayed. It’ll be a rather long and scary-looking path under /dev/disk/by-path; don’t stare at it for too long. Just continue with the rest of the new VM wizard in the normal manner and the guest will shortly be up & running using iSCSI storage for its virtual disk.
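The by-path name looks scary, but it is structured: udev builds it from the portal address and port, the target IQN and the LUN number, with a `-partN` suffix for partitions. A small Python parser makes the pieces explicit. It is a sketch that assumes an IPv4 portal address and the `ip-<addr>:<port>-iscsi-<iqn>-lun-<n>` layout seen in this post; `parse_by_path` and `ByPathName` are hypothetical names.

```python
import re
from typing import NamedTuple, Optional


class ByPathName(NamedTuple):
    address: str
    port: int
    iqn: str
    lun: int
    partition: Optional[int]


# Assumed layout: ip-<IPv4 address>:<port>-iscsi-<iqn>-lun-<n>[-part<m>]
# The IQN itself may contain ':' and '-', so it is matched greedily and the
# trailing -lun-<n> (and optional -part<m>) anchor the end of the name.
_BY_PATH_RE = re.compile(
    r"^ip-(?P<address>[^:]+):(?P<port>\d+)-iscsi-(?P<iqn>.+)-lun-(?P<lun>\d+)"
    r"(?:-part(?P<part>\d+))?$"
)


def parse_by_path(path: str) -> ByPathName:
    """Split an iSCSI /dev/disk/by-path name into its components."""
    name = path.rsplit("/", 1)[-1]  # accept a full path or a bare name
    m = _BY_PATH_RE.match(name)
    if m is None:
        raise ValueError(f"not an iSCSI by-path name: {name!r}")
    part = m.group("part")
    return ByPathName(
        address=m.group("address"),
        port=int(m.group("port")),
        iqn=m.group("iqn"),
        lun=int(m.group("lun")),
        partition=int(part) if part is not None else None,
    )
```

Applied to the path quoted later in the comments, `/dev/disk/by-path/ip-192.168.0.4:3260-iscsi-iqn.2004-04.com.qnap:ts-509:iscsi.linux02.ba4731-lun-0-part1`, it yields address 192.168.0.4, port 3260, LUN 0 and partition 1.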

Final words

Hopefully this quick walkthrough has shown that provisioning KVM guests on Fedora 12 using iSCSI is as easy as 1, 2, 3. The hard bit is probably going to be on your iSCSI server if you don’t have a NAS with a nice administrative interface like the QNAP’s.

The libvirt storage pool management architecture includes support for actually constructing storage pools & allocating volumes within them. For an LVM storage pool, libvirt would do this using vgcreate and lvcreate respectively. The iSCSI protocol standard does not support these kinds of operations, but many vendors provide ways to do this with custom APIs; for example, Dell EqualLogic iSCSI arrays have an SSH command shell that can be used for LUN creation/deletion. It would be desirable to add support for these vendor-specific APIs for LUN creation/deletion in libvirt. It could even be possible to support iSCSI target creation from libvirt if suitable APIs were available. This would dramatically simplify the steps required to provision new guests on iSCSI by enabling everything to be done from virt-manager, without the need to touch the NAS admin interfaces.
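To make the proposed vendor integration a little more concrete, here is a purely hypothetical Python sketch of the operations such a pluggable backend would need to offer. It is not libvirt's actual storage driver API (which is written in C); `LunProvisioner` and `InMemoryProvisioner` are invented names, and the in-memory class merely stands in for a real backend, such as one driving an array's SSH shell.

```python
from abc import ABC, abstractmethod
from typing import Dict, Tuple


class LunProvisioner(ABC):
    """Hypothetical plug-in interface for vendor-specific LUN management."""

    @abstractmethod
    def create_lun(self, name: str, size_bytes: int) -> int:
        """Create a LUN on the array and return its LUN number."""

    @abstractmethod
    def delete_lun(self, lun: int) -> None:
        """Delete the LUN with the given number."""


class InMemoryProvisioner(LunProvisioner):
    """Toy backend that only records LUNs in a dict, useful for testing callers."""

    def __init__(self) -> None:
        self._luns: Dict[int, Tuple[str, int]] = {}
        self._next = 0

    def create_lun(self, name: str, size_bytes: int) -> int:
        if size_bytes <= 0:
            raise ValueError("size must be positive")
        lun = self._next
        self._next += 1  # LUN numbers are never reused in this toy model
        self._luns[lun] = (name, size_bytes)
        return lun

    def delete_lun(self, lun: int) -> None:
        del self._luns[lun]  # raises KeyError for an unknown LUN

    def luns(self) -> Dict[int, Tuple[str, int]]:
        return dict(self._luns)
```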

A few questions:

1. Can virt-manager cope with iSCSI with dm-multipath on top?

2. Can you deal with (clustered) LVM on top of iSCSI? This would give you all the snapshotting, replication and migration features using the standard LVM toolset on cheap (even Linux) iSCSI targets.

3. If clustered LVM makes your head explode, can libvirt do storage migration itself?

1. libvirt has basic support for multipath devices, but I’m not sure whether it’s wired up into virt-manager yet.

2. yes, you can set up LVM on top of iSCSI (or any other type of block device).

3. there’s no support for either cluster LVM or storage migration at this time.

Exceedingly great howto! Thank you so much for creating it!

About using LUNs on the QNAP: as you mentioned in the first blog post, a LUN file is created on the EXT4 file system. I suspect this will be very slow! I noticed it also does that if you add an iSCSI target to drives that are already formatted. =(

So I am curious why you don’t connect the iSCSI target to the KVM host and create an LVM Volume Group directly on the iSCSI target?

Wouldn’t that allow you to use awesome LVM backup tools like KeepSafe and Tartarus, without having to log in to the QNAP to create new LUNs?

Disclaimer: I am new to iSCSI and libvirt storage pools. Are there situations where LUNs are “the right thing(TM)” to use? Or do LUNs make more sense on real SANs?

Keep up the great blog posts =)

There is no ‘right way’ to do iSCSI; there are several options based on your deployment needs and architecture. The primary downside to exporting a single LUN and then running LVM on top of it is that it complicates multi-host deployments. You have to use Cluster LVM instead of regular LVM to ensure consistency across all hosts that can see the volume group. This in turn requires that you run the Cluster Suite software and ideally have hardware fencing capabilities for all your hosts. Of course you may well *want* such an arrangement for the sake of high availability in a data center, but for me at home it is not practical. My virtualization “hosts” are mostly my laptops, so it’s not appropriate to run clustering software on them. One iSCSI LUN per virtual guest disk thus becomes the main viable architecture.

Btw, the programs I meant were:

http://safekeep.sourceforge.net/

http://wertarbyte.de/tartarus.shtml

SafeKeep is in Fedora, but for some reason Tartarus is not.

I had no idea that LVM could be used with clusters. In my case I would prefer to have just one LUN with LVM for all hosts. I have now enabled clvmd (the cluster LVM daemon) on 2 Fedora guests and on my Fedora 13 desktop, which is the KVM host.

All 3 hosts can now see the same LVM Volume Group. If host ‘A’ has a guest running in a Logical Volume, can I make a snapshot of that Logical Volume on host ‘B’? Or do I have to make the snapshot on host ‘A’, because the guest using that LV is on host ‘A’?

If you have cluster LVM enabled, my understanding is that changes on any host will be immediately replicated to all hosts. Best check the docs on this though.

From http://www.redhat.com/docs/en-US/Red_Hat_Enterprise_Linux/5.4/html/Logical_Volume_Manager_Administration/snapshot_command.html

“LVM snapshots are not supported across the nodes in a cluster. You cannot create a snapshot volume in a clustered volume group.”

I guess that’s not possible then =(

Ok, now I am ready to deploy your howto.

I have 1 QNAP and 3 KVM hosts. I will give each KVM host a LUN with LVM inside.

Are you aware of pros and cons in using “1 target + 3 LUNs” or “3 targets + 3 LUNs”?

What would you recommend?

If you have multiple hosts and want different LUNs visible on each host, then you might want multiple targets – each host would login to a different target, thus seeing different LUNs. If you want all your hosts to always see the same LUNs, then 1 target with multiple LUNs would work just fine. Personally I just use one target, many LUNs.

Excellent. Thanks a lot!

Okay, now I have connected a target with 1 LUN which contains an LVM partition, which contains a Volume Group (called vg00) with 1 Logical Volume.

Now I would like to add this LVM partition to libvirt’s storage pools, so I select LVM in your “Adding a storage pool” step.

Then I have to enter a path for the Volume Group.

The problem is that I don’t see /dev/vg00 as I would expect. I have a local LVM partition called “vol” and I can add that by /dev/vol to libvirt, and it works perfectly in virt-manager.

The path for vg00 is

/dev/disk/by-path/ip-192.168.0.4:3260-iscsi-iqn.2004-04.com.qnap:ts-509:iscsi.linux02.ba4731-lun-0-part1

But I can’t enter that. virt-manager file chooser wants a directory, not a file.

Do you know how to add this Volume Group as a storage pool?

Okay, I sort of found out the solution =)

vgchange -ay vg00

gives me /dev/vg00

But it doesn’t happen by itself after a reboot, and I suspect it needs to happen quite early in the boot sequence, so adding it to rc.local probably wouldn’t be early enough for libvirt?

What would you do in this situation?

That shouldn’t be required subsequently, since libvirt automatically runs ‘vgchange -ay $NAME’ when it starts an LVM storage pool. In fact it shouldn’t really have been required in the first instance either. libvirt has a method to discover valid storage pool sources, so in theory virt-manager should have been able to present you with a list of LVM pool names automatically.
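For reference, libvirt describes an LVM pool with a `logical` pool definition that names the volume group and the physical volume it lives on. Here is a minimal Python sketch that generates one for `vg00` on the iSCSI partition from the question; `logical_pool_xml` is a hypothetical helper, and I have not checked that every element is accepted by the libvirt 0.6.3 packages listed in the next comment. Once such a definition is loaded with `virsh pool-define`, starting the pool is the point at which libvirt runs `vgchange -ay`, as described above.

```python
import xml.etree.ElementTree as ET


def logical_pool_xml(vg_name: str, pv_path: str) -> str:
    """Build a libvirt <pool type='logical'> definition for an existing volume group."""
    pool = ET.Element("pool", type="logical")
    ET.SubElement(pool, "name").text = vg_name
    source = ET.SubElement(pool, "source")
    # Physical volume backing the VG, here a partition on the iSCSI LUN
    ET.SubElement(source, "device", path=pv_path)
    # Name of the volume group as known to LVM
    ET.SubElement(source, "name").text = vg_name
    ET.SubElement(source, "format", type="lvm2")
    target = ET.SubElement(pool, "target")
    # Directory where the activated LVs appear (/dev/<vg>)
    ET.SubElement(target, "path").text = f"/dev/{vg_name}"
    return ET.tostring(pool, encoding="unicode")


if __name__ == "__main__":
    print(logical_pool_xml(
        "vg00",
        "/dev/disk/by-path/ip-192.168.0.4:3260-iscsi-"
        "iqn.2004-04.com.qnap:ts-509:iscsi.linux02.ba4731-lun-0-part1",
    ))
```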

If I don’t run “vgchange -ay vg00” then I don’t get /dev/vg00. When I reboot, /dev/vg00 is gone. Can I add an LVM storage pool in virt-manager without the /dev/vg00 path?

I use:

localhost:~# rpm -qa|grep virt|sort

libvirt-0.6.3-33.el5_5.3

libvirt-0.6.3-33.el5_5.3

libvirt-python-0.6.3-33.el5_5.3

python-virtinst-0.400.3-9.el5

virt-manager-0.6.1-12.el5

virt-viewer-0.0.2-3.el5

localhost:~#

Looking at /etc/rc3.d/

S02lvm2-monitor -> ../init.d/lvm2-monitor*

S07iscsid -> ../init.d/iscsid*

S13iscsi -> ../init.d/iscsi*

S97libvirtd -> ../init.d/libvirtd*

Could the problem be, that lvm2-monitor is started before iscsi?
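The question hinges on SysV start order: the S links in /etc/rc3.d run in order of their `S<NN>` prefix. This small Python sketch derives that order from the listing above. It only confirms the ordering (lvm2-monitor at S02 before iscsi at S13, with libvirtd last at S97) and does not by itself establish that this is the cause; `start_order` and `starts_before` are hypothetical helper names.

```python
import re
from typing import Iterable, List

# Start links look like S<two-digit sequence><service name>
_START_LINK = re.compile(r"^S(?P<seq>\d{2})(?P<service>.+)$")


def start_order(lines: Iterable[str]) -> List[str]:
    """Return service names in the order SysV init starts them.

    Accepts bare link names or 'ls'-style lines such as
    'S13iscsi -> ../init.d/iscsi*'; K (kill) links and blank lines are ignored.
    Ties on the sequence number are broken by name, mirroring lexical ordering.
    """
    entries = []
    for line in lines:
        fields = line.split()
        if not fields:
            continue
        m = _START_LINK.match(fields[0])
        if m:
            entries.append((int(m.group("seq")), m.group("service")))
    return [service for _, service in sorted(entries)]


def starts_before(order: List[str], first: str, second: str) -> bool:
    """True if service `first` is started before service `second`."""
    return order.index(first) < order.index(second)
```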

“Obviously care should be taken to not run the same VM on two machines at once though. Ideally use clustering software to protect against this scenario.” Have you found a good clustering solution for this? I have VM live migration with iSCSI working just great, but the ability to start a VM on multiple nodes is a big concern for production systems.

I had my eye on the oVirt project, but it seems to have died down a bit.

Hi Daniel, great tutorial. A couple of us are having an issue with virt-manager and iSCSI. I have added my issues to the Bugzilla report below; I was just wondering if you had seen these issues with recent versions of libvirt/virt-manager.

https://bugzilla.redhat.com/show_bug.cgi?id=669935

Hi Daniel,

I just discovered your website – great!

I started to install and learn KVM/QEMU about 2 weeks ago and I am quite happy with it (my previous setup was on a VirtualBox platform).

Now I am running 3 KVM-hosts that shall be configured to be able to migrate all installed VMs across each other. All hosts are working very well and even the migration of file-based VMs is working without any problems.

However, I still have an iSCSI migration issue on which I’d like to ask your advice:

I configured and installed several VMs that are running with iscsi-storage (previously configured in the storage-pool w/o problems). Now, when I try to migrate from one host to another one, I am getting the error message as follows: “Guest could not be migrated: Cannot access storage file ‘/dev/disk/by-path/’ (as uid:0, gid:0): file or directory not found”

In qemu.conf I have configured user="root" and group="root", as the uid and gid in the error message also indicate. Even so, I keep getting this error.

Obviously it’s an authorization problem … – so your advice on this is very much appreciated.

Thank you very much!